As developers of machine learning applications for medical imaging, we are often asked: “Why can’t we just have AI read a chest X-ray (CXR) and classify it as normal or abnormal?” How hard could that be? Since no one has yet obtained FDA clearance for an algorithm that does this, it is probably harder than it appears. This article explains some of the challenges and what it will take to bring this time-saving innovation into clinical practice.

Challenges with Publicly Available CXR Datasets

Access to data plays an important role in developing deep learning models that detect and diagnose thoracic diseases. To avoid “garbage in, garbage out,” AI models need to be trained on high-quality, accurately labeled images.

In 2018, the Radiological Society of North America (RSNA) announced the Pneumonia Detection Challenge, a Kaggle competition inviting participants to build an AI algorithm that automatically locates lung opacities on CXR images. The competition drew on a large, publicly available chest X-ray dataset from the US National Institutes of Health (NIH), ChestX-ray14 (CXR14). The original release of the dataset (then called ChestX-ray8) contained 108,948 frontal-view CXR images from 32,717 patients; the expanded ChestX-ray14 release contains 112,120 images from 30,805 patients.

CXR14 raised concerns in the AI community about both its labels and its image quality. Some patients contributed more than ten scans each, which produced overlapping images and variability at the level of individual subjects. The labels were also extracted from free-text radiology reports with a natural language processing (NLP) tool, so they carry potential errors from the extraction process itself as well as from the inherent difficulty of describing images in text. Because of these quality problems, some researchers judged CXR14, as released, unfit for training clinical AI algorithms.
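Repeated scans of the same patient are one reason data splits must be made carefully: if images from one patient land in both the training and test sets, test performance can be overstated. Here is a minimal Python sketch of a patient-level split, assuming each record is an (image ID, patient ID) pair; the function name and record layout are hypothetical and are not part of the CXR14 distribution.

```python
import random
from collections import defaultdict


def split_by_patient(records, test_fraction=0.2, seed=0):
    """Split (image_id, patient_id) records so no patient appears in both sets.

    Hypothetical helper for illustration; the record layout is an assumption,
    not the CXR14 file format.
    """
    # Group image IDs by the patient they belong to.
    images_by_patient = defaultdict(list)
    for image_id, patient_id in records:
        images_by_patient[patient_id].append(image_id)

    # Shuffle patients (not images) reproducibly, then assign whole patients.
    patients = sorted(images_by_patient)
    random.Random(seed).shuffle(patients)
    n_test = max(1, round(len(patients) * test_fraction))
    test_patients = set(patients[:n_test])

    train, test = [], []
    for patient_id in patients:
        target = test if patient_id in test_patients else train
        target.extend(images_by_patient[patient_id])
    return train, test
```

Splitting at the patient level means every image of a given patient, however many scans that patient contributed, ends up on the same side of the split.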

Other datasets have since been made public, including MIMIC Chest X-ray (MIMIC-CXR), the DeepLesion dataset, and the Lung Image Database Consortium (LIDC) collection; the latter two consist of CT rather than X-ray images. While each of these resources has its own limitations and quality issues, together they represent an unprecedented opportunity.

Autonomous AI and the FDA

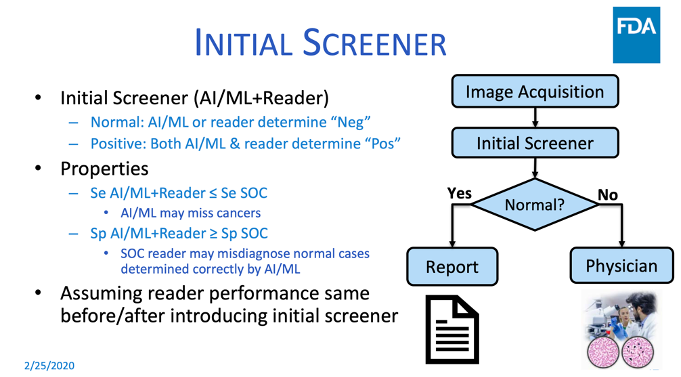

During the Food and Drug Administration’s Public Workshop on the Evolving Role of Artificial Intelligence in Radiological Imaging, Nicholas Petrick, PhD, of the FDA discussed pre-market and post-market evaluation of autonomous AI and machine learning, and shared lessons from the Agency’s experience regulating computer-aided detection and diagnosis (CAD) devices. On one of his slides, shown above, he describes a scenario very similar to the one we are discussing: having AI screen out normal exams and leave the abnormal exams for human interpretation.

Dr. Petrick noted that the history of FDA review of CAD devices goes back to an automated cervical cytology slide reader that selects up to 25% of Pap smear slides as needing no further review. He asserted that the basic principles of those reviews continue to apply to autonomous AI and machine learning submissions, with additional considerations such as the ability to compare algorithms across different datasets. He also noted the usual generalizability considerations, such as how representative the patient population is and how the clinical images were acquired, but with data from more sites to minimize potential selection bias. Enriched datasets may be required to inform device labeling, determine algorithm limitations, and support generalizability analysis. As an example, Dr. Petrick cited an automated Pap smear reader that was evaluated in a two-arm, prospective, intended-use study at five cytology laboratories. The bar is high, as it should be, given the risk of a false “normal” call: an abnormal exam cleared without human review.
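Screening out normal exams, as the Pap smear reader does for up to 25% of slides, comes down to choosing an operating threshold on a model’s abnormality score: exams scoring below the threshold are cleared without review, and the threshold is capped so that few abnormal exams fall below it. The Python sketch below assumes a model that outputs one abnormality score per exam and a validation set labeled against a trusted reference standard; the function `rule_out_threshold` and the `max_miss_rate` tolerance are hypothetical and illustrative, not a regulatory criterion.

```python
def rule_out_threshold(scores, labels, max_miss_rate=0.01):
    """Pick the highest threshold whose miss rate stays within tolerance.

    Hypothetical helper for illustration; not an FDA-defined procedure.
    scores: abnormality scores (higher means more likely abnormal).
    labels: 1 for abnormal, 0 for normal (reference standard).
    Exams with score < threshold would be auto-cleared as "normal".
    Returns (threshold, miss_rate, cleared_fraction).
    """
    abnormal_scores = [s for s, y in zip(scores, labels) if y == 1]
    n_abnormal = len(abnormal_scores)
    if n_abnormal == 0:
        raise ValueError("need at least one abnormal exam to estimate misses")

    # Threshold at the lowest score clears nothing, so it is always allowed.
    best = (min(scores), 0.0, 0.0)
    # Try each observed score as a threshold, from low to high.
    for t in sorted(set(scores)):
        missed = sum(1 for s in abnormal_scores if s < t)
        miss_rate = missed / n_abnormal
        if miss_rate > max_miss_rate:
            break  # the miss rate can only grow as the threshold rises
        cleared = sum(1 for s in scores if s < t) / len(scores)
        best = (t, miss_rate, cleared)
    return best
```

For example, with scores `[0.05, 0.10, 0.20, 0.30, 0.60, 0.70, 0.90, 0.95]` and labels `[0, 0, 0, 1, 0, 1, 1, 1]`, a zero-miss tolerance gives a threshold of 0.30 that auto-clears 37.5% of exams. On real data, the observed miss rate is only an estimate, and its precision depends on how many abnormal exams the validation set contains.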

During the February 2020 workshop, the Agency also convened a panel to discuss the use of autonomous and semi-autonomous AI systems in clinical settings. Autonomous AI systems could make mistakes that harm patients, and they raise the question of who is responsible for an AI misdiagnosis.

To address these challenges, the panel concluded that the safety of both users and patients must be considered. Bringing autonomous systems into clinical settings will likely require ongoing monitoring and evaluation. Panelists also suggested that algorithms be evaluated by a third party, so that they can be compared against a standard definition.

Using AI to separate normal X-ray exams from abnormal ones is technically feasible. To reduce risk, the training and validation datasets may need very large numbers of exams with highly reliable ground truth. The datasets must also be diverse along many dimensions, such as demographics, hardware, and acquisition technique. Demonstrating clinical effectiveness may additionally require a two-arm, randomized, prospective clinical trial. These efforts are not necessarily difficult, but they can be expensive. However, the economic benefit of sparing radiologists from reading millions of normal scans would be substantial, perhaps enough to justify the investment. Getting there will require collaboration between healthcare delivery organizations and a qualified developer of medical imaging AI products. We welcome discussions about such a collaboration.
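One reason the datasets must be so large: if a model misses none of n abnormal exams during validation, the exact (Clopper-Pearson) one-sided 95% upper confidence bound on its true miss rate is 1 − 0.05^(1/n), roughly 3/n (the “rule of three”). The Python sketch below computes that bound and the number of abnormal exams needed to reach a target; the target miss rates used in the example are illustrative assumptions, not regulatory requirements.

```python
import math


def upper_bound_zero_misses(n_abnormal, confidence=0.95):
    """Exact one-sided upper confidence bound on the miss rate
    when 0 of n_abnormal abnormal exams were missed."""
    alpha = 1.0 - confidence
    return 1.0 - alpha ** (1.0 / n_abnormal)


def abnormals_needed(target_miss_rate, confidence=0.95):
    """Smallest number of abnormal exams, all correctly flagged, for the
    upper confidence bound on the miss rate to fall at or below the target."""
    alpha = 1.0 - confidence
    # Solve 1 - alpha**(1/n) <= target for n, then round up.
    return math.ceil(math.log(alpha) / math.log(1.0 - target_miss_rate))
```

With these formulas, `abnormals_needed(0.01)` returns 299 and `abnormals_needed(0.001)` returns 2995. These counts cover only the abnormal exams; because most screening exams are normal, the total number of exams needed would be far larger.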

This resource was first published prior to the 2020 rebranding of CuraCloud to Keya Medical. The content reflects our legacy brand.
